
After upgrading my homelab to the latest vSphere 7.0 Update 2a, I was looking forward to kicking the tires on the highly anticipated vSphere with Tanzu Virtual Machine (VM) Service capability. Oren Penso and Myles Gray have both done a fantastic job demoing the new VM Service on their respective blogs.

Since I came across Oren’s blog post first, I started by browsing through his GitHub repo, and a couple of things quickly caught my attention. The first was a reference to the OvfEnv transport in the YAML manifests, and the second was that he was able to deploy an Ubuntu VM, which is interesting since only CentOS is currently officially supported. Why was this interesting? Well, with these two pieces of information, I had a pretty good theory on how the guest customizations were being passed into the GuestOS for configuration, and this gave me an idea 🤔

I decided to put my hypothesis to the test by using the VM Service to deploy one of my Nested ESXi Virtual Appliances and, as you can see from the tweet below, it worked! 🤯

Disclaimer: vSphere with Tanzu and the VM Service currently only officially support CentOS images for deployment; other operating systems are not supported. This is for educational and experimentation purposes only.

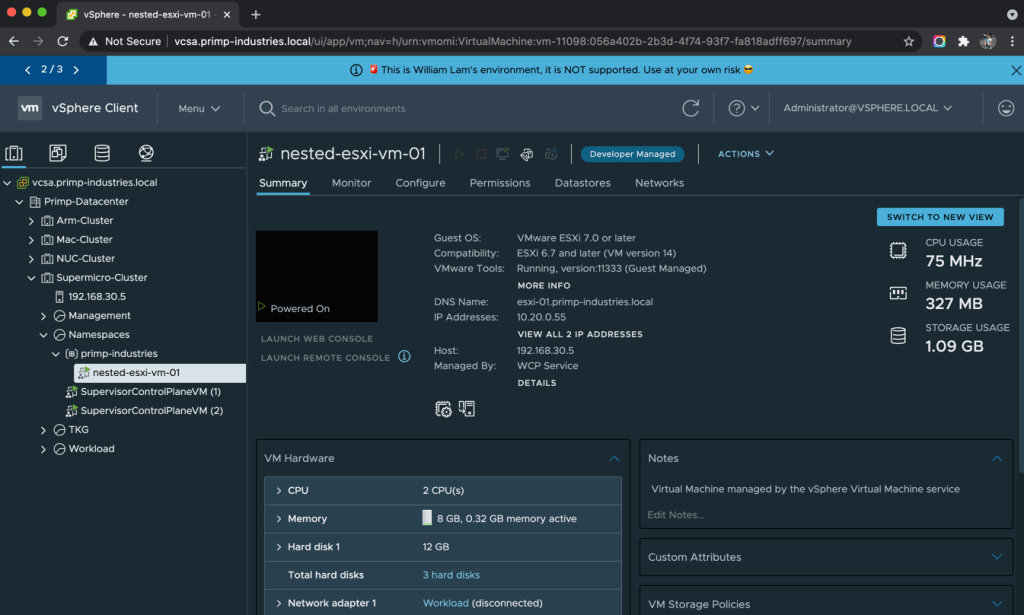

Although the deployment and configuration of my Nested ESXi VM works, I did run into one issue due to the way the guest customization engine currently works. The VM’s network adapter starts out disconnected; when the VM boots up, VMware Tools attempts to customize it using LinuxPrep (only supported with a small set of Linux OSes), and upon a successful customization, it then reconnects the network adapter.

Since my Nested ESXi Appliance implements its own customization method through a custom guest OS script (an approach that can also apply to other virtual appliance form factors), an unexpected behavior occurred: VMware Tools was unsuccessful in performing the customization, and because of that, the network adapter of my VM was never reconnected. In speaking with one of the engineers on the VM Service team, I learned about this behavior, but I also found out that it would be possible to add other types of guest customization, which makes the VM Service quite extensible and could make my particular use case a reality in the future 😀 I also learned that he had gone through a few of my OvfEnv blog articles while developing this feature, which totally makes sense now since I did very little to make this work!

Although there is no workaround today for handling the disconnected network adapter, I did recently come across Florian Grehl’s blog post on how to migrate a Supervisor Control Plane VM, which shows how to access the VM Operator account to log in to the vSphere UI. Since VMs provisioned by the VM Service are managed and cannot be accessed by normal administrators, logging in with this account would give you the ability to reconnect the network adapter.

My initial goal was really to understand how this works, and I just happened to use my Nested ESXi Appliance to validate my assumptions. For those interested in trying this out and potentially applying the concept to other solutions, you can follow the instructions below.

Step 1 – Import the desired Nested ESXi OVA into a new vSphere Content Library. One important thing to understand is that the Content Library item name must be DNS-compliant. This is a Kubernetes (k8s) requirement, and if you happen to use uppercase or other unsupported characters, the VM Service will not be able to deploy the image. This certainly has implications for any existing VM images you may have, which will need to be cloned into a new item with a compliant name.
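To catch a bad name before importing, a quick local check can help. The sketch below is a hypothetical POSIX shell function (is_dns1123_subdomain is my own name, not part of any VMware tool) that assumes the Kubernetes DNS-1123 subdomain rules apply: lowercase letters, digits, "-" and ".", each dot-separated label starting and ending with a letter or digit, and at most 253 characters overall. The exact validation the VM Service performs may differ, so treat this as a sanity check rather than a guarantee.

```shell
#!/bin/sh
# Hypothetical helper: sanity-check a Content Library item name against the
# Kubernetes DNS-1123 subdomain rules (ASSUMED to be the rule enforced here).
# Returns 0 if the name looks valid, 1 otherwise.
is_dns1123_subdomain() {
  local name="$1"
  local nl='
'
  # Empty names and names longer than 253 characters are never valid.
  [ -n "$name" ] && [ "${#name}" -le 253 ] || return 1
  # grep matches line by line, so reject embedded newlines up front.
  case $name in
    *"$nl"*) return 1 ;;
  esac
  # Each dot-separated label: lowercase letters, digits and '-', starting and
  # ending with a letter or digit. LC_ALL=C keeps [a-z] strictly ASCII.
  printf '%s\n' "$name" |
    LC_ALL=C grep -Eq '^[a-z0-9]([-a-z0-9]*[a-z0-9])?(\.[a-z0-9]([-a-z0-9]*[a-z0-9])?)*$'
}

# Example: check a couple of candidate item names before importing the OVA.
for item in nested-esxi7.0u2a-appliance-template-v1 Nested_ESXi_7.0U2a; do
  if is_dns1123_subdomain "$item"; then
    echo "OK:      $item"
  else
    echo "INVALID: $item"
  fi
done
```

The second name fails because of its uppercase letters and underscores, which is exactly the kind of item that would need to be cloned under a new name.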

Step 2 – Associate both the vSphere Content Library from the previous step and the VM Classes (either the pre-defined ones or your own) with the vSphere Namespace where you intend to deploy the Nested ESXi VMs.

Step 3 – After logging in to your vSphere Namespace with kubectl, run the following command to confirm that your Nested ESXi image is showing up (this can take a minute or so). The image should also be listed as not supported, as shown in the screenshot below:

```shell
kubectl get vmimage -o wide
```
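Because the image can take a minute or so to appear, you may prefer to poll for it instead of re-running the command by hand. The following is a hypothetical POSIX shell sketch (wait_for_vmimage is my own helper, not a VMware command). It assumes you are already logged in to the vSphere Namespace and that the VM image name matches your Content Library item name.

```shell
#!/bin/sh
# Hypothetical helper: poll until the named VM image is visible to kubectl,
# giving up after a fixed number of attempts.
# Usage: wait_for_vmimage IMAGE_NAME [ATTEMPTS] [INTERVAL_SECONDS]
wait_for_vmimage() {
  local image="$1" attempts="${2:-12}" interval="${3:-10}" i=1
  while [ "$i" -le "$attempts" ]; do
    # 'kubectl get' exits non-zero while the named resource does not exist.
    if kubectl get vmimage "$image" >/dev/null 2>&1; then
      echo "VM image $image is available"
      return 0
    fi
    echo "Waiting for VM image $image (attempt $i of $attempts)"
    sleep "$interval"
    i=$((i + 1))
  done
  echo "VM image $image not found after $attempts attempts" >&2
  return 1
}

# Example (ASSUMES the image name matches the Content Library item name):
# wait_for_vmimage nested-esxi7.0u2a-appliance-template-v1 && kubectl get vmimage -o wide
```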

Step 4 – Create a VM deployment manifest file named nested-esxi-vm-01.yaml (you can name it anything) containing the following, and update the various parameters in the spec to match your environment configuration.

Hints:

- imageName – Use kubectl get vmimage to list the available VM images

- className – Use kubectl get vmclassbinding to list the available VM Class mappings

- storageClass – Use kubectl get sc to list the available storage classes

- networkName – Use kubectl get network to list the available networks

```yaml
apiVersion: vmoperator.vmware.com/v1alpha1
kind: VirtualMachine
metadata:
  name: nested-esxi-vm-01
  labels:
    app: nested-esxi-vm-01
  annotations:
    vmoperator.vmware.com/image-supported-check: disable
spec:
  imageName: nested-esxi7.0u2a-appliance-template-v1
  className: best-effort-medium
  powerState: poweredOn
  storageClass: vsan-default-storage-policy
  networkInterfaces:
  - networkType: vsphere-distributed
    networkName: workload-1
  vmMetadata:
    configMapName: nested-esxi-vm-01
    transport: OvfEnv
---
apiVersion: v1
kind: ConfigMap
metadata:
  name: nested-esxi-vm-01
data:
  guestinfo.hostname: esxi-01.primp-industries.local
  guestinfo.ipaddress: 10.20.0.55
  guestinfo.netmask: 255.255.255.0
  guestinfo.gateway: 10.20.0.1
  guestinfo.dns: 192.168.30.2
  guestinfo.ntp: pool.ntp.org
  guestinfo.domain: primp-industries.local
```

The key insight I got from one of Oren’s examples was the use of the special annotation vmoperator.vmware.com/image-supported-check: disable, which disables the VM Service’s image check and thus allows us to deploy an unsupported OS image. After that, the rest was pretty straightforward, and I have included some hints above on how to look up the various parameters, as these are mappings created between vSphere with Tanzu and the underlying vSphere infrastructure.

The last section of the manifest creates a K8s ConfigMap (CM) containing the input values you would normally populate when deploying through the vSphere UI, PowerCLI or OVFTool, namely the OVF property keys and values. For those of you familiar with automating OVF/OVA deployments, this is where the lightbulb should click: the VM Service has been designed in a very familiar manner, which makes the consumption experience pretty straightforward.
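To make the OVF analogy concrete, here is a hypothetical POSIX shell helper (ovf_props_to_configmap is my own name) that takes the same key=value pairs you might otherwise pass to OVFTool as --prop:key=value and prints the equivalent ConfigMap. It is only a sketch: it assumes the keys are already valid ConfigMap keys (such as the guestinfo.* properties above) and that values are single-line, and it single-quotes every value so YAML always reads it as a string.

```shell
#!/bin/sh
# Hypothetical helper: print a ConfigMap whose data entries are the given
# OVF property key=value pairs (ASSUMES single-line values).
# Usage: ovf_props_to_configmap CONFIGMAP_NAME KEY=VALUE...
ovf_props_to_configmap() {
  local name="$1" pair key value
  shift
  # Validate every argument before printing anything.
  for pair in "$@"; do
    case $pair in
      *=*) ;;
      *) echo "expected KEY=VALUE, got: $pair" >&2; return 1 ;;
    esac
    [ -n "${pair%%=*}" ] || { echo "empty key in: $pair" >&2; return 1; }
  done
  printf 'apiVersion: v1\nkind: ConfigMap\nmetadata:\n  name: %s\ndata:\n' "$name"
  for pair in "$@"; do
    key=${pair%%=*}    # text before the first '='
    value=${pair#*=}   # text after the first '='
    # YAML single-quoted scalar: escape embedded single quotes by doubling them.
    value=$(printf '%s' "$value" | sed "s/'/''/g")
    printf "  %s: '%s'\n" "$key" "$value"
  done
}

# Example: the same values as the ConfigMap in Step 4.
ovf_props_to_configmap nested-esxi-vm-01 \
  guestinfo.hostname=esxi-01.primp-industries.local \
  guestinfo.ipaddress=10.20.0.55 \
  guestinfo.netmask=255.255.255.0 \
  guestinfo.gateway=10.20.0.1 \
  guestinfo.dns=192.168.30.2 \
  guestinfo.ntp=pool.ntp.org \
  guestinfo.domain=primp-industries.local
```

Piping the output into kubectl apply -f - would create only the ConfigMap; the VirtualMachine half of the manifest still has to be applied on its own.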

Step 5 – Once you have saved your changes to the YAML file, you can deploy the Nested ESXi VM by running the following command:

```shell
kubectl apply -f nested-esxi-vm-01.yaml
```

If everything was configured correctly, you should see a task kick off and the VM Service deploying a Nested ESXi VM with all of the customizations provided in the deployment manifest!

You can also retrieve the IP address of the VM with kubectl by running the following command:

```shell
kubectl get vm nested-esxi-vm-01 -o jsonpath='{.status.vmIp}'
```
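Right after the apply, the status.vmIp field can still be empty while the VM is being cloned and booted, so a small polling loop can be handy. This is a hypothetical POSIX shell sketch (wait_for_vm_ip is my own helper) built on the same jsonpath query; it assumes kubectl is already logged in to the vSphere Namespace, and it gives up after a fixed number of attempts rather than waiting forever.

```shell
#!/bin/sh
# Hypothetical helper: poll the VirtualMachine's status.vmIp field until it is
# populated, then print it; give up after a fixed number of attempts.
# Usage: wait_for_vm_ip VM_NAME [ATTEMPTS] [INTERVAL_SECONDS]
wait_for_vm_ip() {
  local vm="$1" attempts="${2:-30}" interval="${3:-10}" i=1 ip
  while [ "$i" -le "$attempts" ]; do
    # Same query as above; an empty result means no IP has been reported yet.
    ip=$(kubectl get vm "$vm" -o jsonpath='{.status.vmIp}' 2>/dev/null)
    if [ -n "$ip" ]; then
      printf '%s\n' "$ip"
      return 0
    fi
    sleep "$interval"
    i=$((i + 1))
  done
  echo "No IP reported for VM $vm after $attempts attempts" >&2
  return 1
}

# Example:
# wait_for_vm_ip nested-esxi-vm-01 30 10
```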

For the first release of the VM Service, I was pretty impressed at how far I could push it beyond what is officially supported today. To be frank, I really did not have to do much to add my particular use case, and I suspect that with future enhancements, the guest customization engine could become quite flexible in supporting various other methods. From a debugging and troubleshooting standpoint, it was not trivial, as the VMs deployed by the VM Service are all managed by the service, which means that even administrators cannot access the VM console, something that may be required for development and/or debugging purposes. One other thing I noticed was that although my Nested ESXi Appliance image was configured with two network adapters, only a single one was created during the clone operation; I am not sure whether this is a current limitation of the solution or whether I was missing something in the manifest. I suspect that over time, other vSphere VM capabilities will need to be added to the VM Service for customers who take advantage of specific VM features. I look forward to seeing what the next revision of this solution will look like.